Linear regression (IN PROGRESS)

from pathlib import Path

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

from scipy import stats

from statsmodels.tools.tools import add_constant

from statsmodels.regression.linear_model import OLS

from statsmodels.formula.api import ols

url_path = "https://cdn.jsdelivr.net/gh/LarsHenrikNelson/PatchClampHandbook/data/"Linear regression (IN PROGRESS)¶

Linear regression is one of the most used ways to model data. Linear regression has an equation form of:

where is y is a vector of dependent variable (can also be a maxtrix Y if it is multivariate regression), X is a matrix of independent variables with each column being a feature and each row being a subject, is a vector coefficients, and is the vector of errors. is add to the equation because we can never fully model the relationship between y and X. Linear regression makes several assumptions about . If you look at the equation through the lens of linear algebra is actually the inner dot product between the matrix and vector . is a specific form of the linear equation where there are just two coefficients m and b. Linear regresssion that has more than just one coefficient like is called multiple linear regression. Linear regression can model non-linear and categorical features. Linear regression using least squares which is a minimization method where the is minimized. By minimizing distance between the predicted values and the given values. Minimization uses calculus. In some cases there is a solvable equation and in other cases we just approximate solution.

Ordinary least squares¶

Ordinary least squares (OLS) is probably what you are using when you run linear regression and ANOVA. OLS has the same equation as but has what is called a closed form solution; the solution is solvable with linear algebra with the equation . OLS assumes that a constant change in the predictor leads to a constant change in the dependent variable.

Slopes¶

Slopes are just the regression coefficients without the intercept coefficient. will be a vector of coefficients that is the number of your independent variables + one. The extra coefficient is the intercept. You can actually measure the mean of you data if your independent variables are all 1 so you slope will be 0 and the intercept will be the mean. Not computationally efficient but possible.

Intercept¶

The intercept is the extra coefficient that can be added to the regression equation. You do not need a intercept to fit a linear regression. The intercept is similar to the y-offset that we saw in the curves chapter.

Building a regression model¶

We will go over how to build a regression. We will start with a simple linear regression, cover multiple linear regression, regression with categorical independent variables, polynomial regression and interactions between variables. You can think of categorical and polynomial variables as a type of multiple linear regression.

Simple linear regression¶

Simple linear regression has the equation . and are coefficients which make up the vector of coefficients in the general linear regression equation . You can run simple linear regression three ways in Python; manually or using Scipy and StatModels. We will use Scipy first. After that we will use StatsModels since that will be used for more advanced regression.

Scipy¶

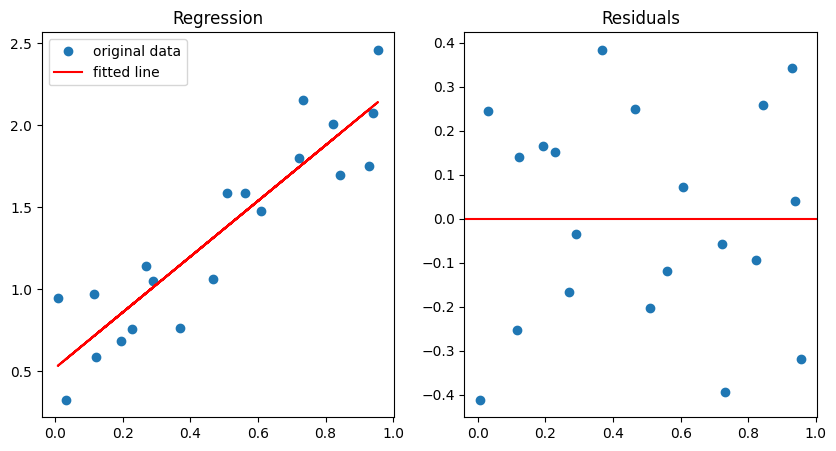

Scipy has a simple interface. I recommend it for any simple linear regression task. However, it does not allow for residual analyis. If you need to 100% ensure your model fits well you should use Statsmodels. You can manually create the residuals but Statsmodels has several helper functions that make it easier. To get the residuals we can just find the predicted point for each x and subtract that from each y. For a simple residual analysis you see the residuals randomly distibutes across 0 when plotted against the independent variable. For simple regression this works but, for multiple regression this will not work because you have many x variables.

rng = np.random.default_rng()

n = 20

x = rng.random(20)

y = 1.6 * x + rng.random(20)

res = stats.linregress(x, y)

y_fit = res.intercept + res.slope * x

fig, ax = plt.subplots(ncols=2, figsize=(10,5))

ax[0].plot(x, y, "o", label="original data")

ax[0].plot(x, y_fit, "r", label="fitted line")

ax[0].set_title("Regression")

ax[0].legend()

ax[1].set_title("Residuals")

ax[1].axhline(0, color="red")

_ = ax[1].plot(x, y_fit-y, "o")

Statsmodels¶

For statsmodels we will fit the regression two different ways; using the formula method and the by hand method. The formula method is easier to use to but can hide some preprocessing.

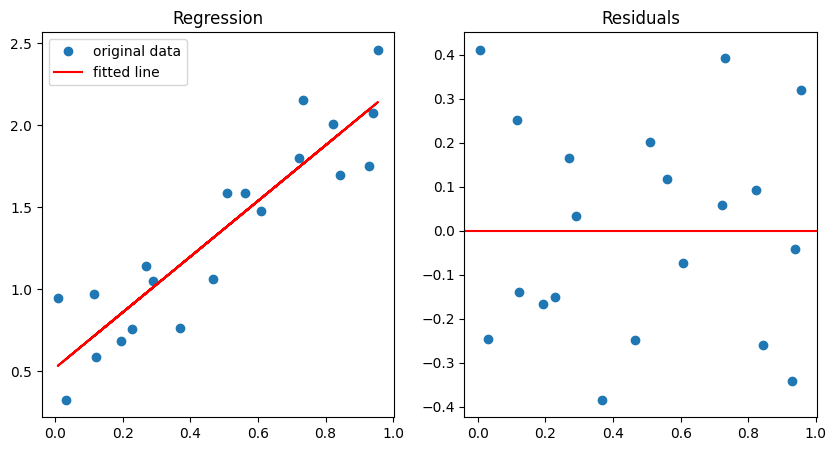

By hand method¶

By hand is really not by hand but we do have to do some prepocessing. If you want to fit and intercept for your model you need to add a column of 1s to the matrix X so that you matrix X will have a column of 1s and a column for independent variable. We add the ones because we are fitting an intercept in our model. Notice how we have to pass our fitted model back to the original OLS model to get the predicted values. We an just grab the residuals from the fitted model rather than computing them manually.

x_const = add_constant(x)

ols_model = OLS(y, x_const)

ols_fit = ols_model.fit()

y_fit = ols_model.predict(ols_fit.params, x_const)

fig, ax = plt.subplots(ncols=2, figsize=(10,5))

ax[0].plot(x, y, "o", label="original data")

ax[0].plot(x, y_fit, "r", label="fitted line")

ax[0].set_title("Regression")

ax[0].legend()

ax[1].set_title("Residuals")

ax[1].axhline(0, color="red")

_ = ax[1].plot(x, ols_fit.resid, "o")

print(ols_fit.params)[0.51978689 1.69839498]

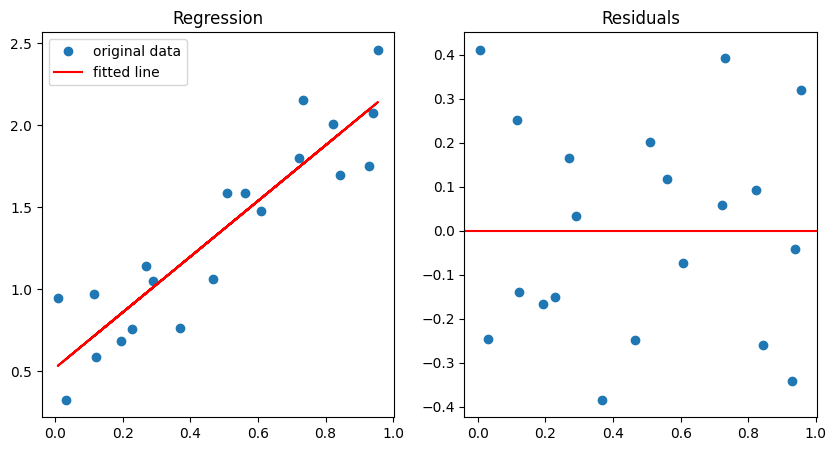

The formula method¶

The formula method is probably the best way to fit models. Python does not natively support formulas like R does so Statsmodels has a package called Patsy that they use to create a formula string which is then processed to create the design matrix which is just a processed version of your depedent variables. Formulas for regression contain the independent variable on the right side and the dependent variable on the left side separated by a ~: "dependent ~ independent". This formula automatically include the intercept implicity like this "dependent ~ 1 + independent".

ols_formula_model = ols("y~x", data={"y":y, "x":x})

formula_fit = ols_formula_model.fit()

y_fit = formula_fit.predict(exog=dict(x=x))

fig, ax = plt.subplots(ncols=2, figsize=(10,5))

ax[0].plot(x, y, "o", label="original data")

ax[0].plot(x, y_fit, "r", label="fitted line")

ax[0].set_title("Regression")

ax[0].legend()

ax[1].set_title("Residuals")

ax[1].axhline(0, color="red")

_ = ax[1].plot(x, formula_fit.resid, "o")

print(formula_fit.params)Intercept 0.519787

x 1.698395

dtype: float64

Summary output¶

You may have noticed that the params of the Statsmodels models only show the coefficients. To get the full fit output we call the summary method. This is very similar to R. You will notice there are a lot of different variables in the formula table that Scipy does not provide. We will go over some of the output. The output can be divide into three sections: model results, cofficient results and some general information about the data.

formula_fit.summary()Model results¶

R-squared: where are the predicted values and \bar{y} is the mean of the dependent variable (y). R-squared is tells you how good the model fits where 0 is does not fit at all and 1 is a perfect fit.

Adj. R-squared: where n is the number of dependent variables and k is the number of features (columns of x + 1 unless you already included the constant). Adjusted R-square penalizes models with lots of features.

Df residuals: The degrees of freedom of you fit which is used use along with the F statistic to find the p value. Df residuals is the number of samples - features (including the intercept). You will never have a model with Df = 0. This is because you need other methods to fit and over determined model.

AIC (Akaike information criteria): AIC is used to score regression models. The smaller the number the better the model. AIC also penalizes models with lots of parameters. When creating models it is best to compare different models and find the model with the lowest score.

BIC (Bayesian information criteria): BIC is used to score regression models. The smaller the number the better the model. Similar to AIC, BIC penalizes the number of model parameters just in a different way than AIC. When creating models it is best to compare different models and find the model with the lowest score.

Coefficient results¶

There will be a row for the intercept and each coefficient in your regression. The number of coefficients can be large if you have polynomial features and categorical features which we will cover in the next part of the chapter. For an OLS model (not necessarily for GLM or mixed models) each coefficient will have several variable which are defined below. Coefficient value: The coefficient value is the actual value of the coefficient in the equation. std err: Standard error of the coefficient. t: The t value for the coefficient P>|t|: The p value for the coefficient. This tells you whether the feature or variable has a signicant effect on the regression curve. [0.025 0.975]: The next two columns are the lower and upper confidence interval for the coefficient. This can tell you have “strong” or how “big” the p value actually is. For example, a confidence that overlaps with zero usually means

Linear regression with nonlinear features¶

There are a couple ways we can fit nonlinear features with standard OLS. One is to just transform the features and the other is to run an polynomial regression. For both of these we will use Statsmodels and the formula method.

Polynomial regression¶

Polynomial is pretty simple in essence but more complex in reality. You can make any feature polynomial be multiplying it by itself one or more times. If you have a column of values just create a new column by multiplying it by itself.

ANOVA¶

ANOVA just pools the effects of of multiple coefficients in the linear regression model when you have categorical variables. For example, if you have a categorical variable treatment with three groups you end up two regression coefficients, each with their own p value. However, we generally want a single p value for our categorical variable so ANOVA pools the variance estimates and derives a F value with degrees of freedom to derive a p value.